Docker

This guide shows how to install and configure Docker and the NVIDIA Container Runtime on NVIDIA Jetson Orin series devices. Both are prerequisites for running GPU-accelerated containers, for example AI inference with Ollama, or containerized applications such as n8n and ROS.

1. Overview

- Install Docker CE to support containerized applications

- Configure NVIDIA runtime to enable GPU acceleration

- Set up non-sudo mode for running Docker

- Configure NVIDIA as the persistent default runtime

This guide covers:

- Docker installation

- NVIDIA runtime configuration

- Runtime testing

- Common issue troubleshooting

2. System Requirements

| Component | Requirement |
|---|---|
| Jetson Hardware | Orin Nano / NX / AGX |
| OS | Ubuntu 20.04 or 22.04 (JetPack-based) |
| Docker Version | Docker CE ≥ 20.10 (recommended) |
| NVIDIA Runtime | nvidia-container-toolkit |
| CUDA Driver | Included in JetPack (requires JetPack ≥ 5.1.1) |

3. Install Docker CE

Install Docker from the official Ubuntu repository:

sudo apt-get update

sudo apt-get install -y docker.io

⚠️ To install the latest version, you can also use Docker's official APT repository.

Verify Docker installation:

docker --version

# Example output: Docker version 20.10.17, build 100c701
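The version recommendation from the System Requirements table can also be checked in a script. The sketch below is one possible approach, not part of the official tooling: the helper names version_ge and docker_version_from are made up for this example, and the comparison relies on GNU sort -V, which is assumed to be available (it ships with Ubuntu's coreutils).

```shell
#!/usr/bin/env bash
# Hypothetical helpers for illustration; neither is part of Docker.

# Succeed when version $1 is greater than or equal to version $2.
# Uses GNU `sort -V` (version sort) to find the smaller of the two.
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Extract the version number from text such as
# "Docker version 20.10.17, build 100c701".
docker_version_from() {
    printf '%s\n' "$1" | sed -n 's/^Docker version \([0-9][0-9.]*\).*/\1/p'
}

# Usage on a device with Docker installed (not executed here):
#   v=$(docker_version_from "$(docker --version)")
#   version_ge "$v" 20.10 && echo "Docker $v meets the recommendation"
```

The parsing is kept separate from the comparison so that each piece can be tried against a pasted version string without Docker being installed.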

4. Run Docker in Non-sudo Mode (Optional)

To run Docker commands as a regular user:

sudo groupadd docker # Create docker group (skip if already exists)

sudo usermod -aG docker $USER

sudo systemctl restart docker

🔁 Log out and back in (or reboot) for the group change to take effect. To apply it to the current shell only, without logging out, run:

newgrp docker
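To see whether the group change has reached the current session, the group list reported by id can be inspected. This is a minimal sketch under the assumption that the group is named docker as above; has_group is a hypothetical helper written for this example.

```shell
# Hypothetical helper: succeed when the space-separated group list $1
# contains the group name $2 as a whole word.
has_group() {
    case " $1 " in
        *" $2 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage (not executed here):
#   has_group "$(id -nG)" docker           # groups of the current session
#   has_group "$(id -nG "$USER")" docker   # groups recorded for the user account
```

If the second check succeeds but the first does not, the account has been added to the group but the current session predates the change, which is the case that logging out or running newgrp resolves.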

5. Install NVIDIA Container Runtime

Install the NVIDIA container toolkit so that containers can access the Jetson GPU:

sudo apt-get install -y nvidia-container-toolkit

6. Configure NVIDIA Docker Runtime

A. Register NVIDIA as a Docker Runtime

Run the configuration command:

sudo nvidia-ctk runtime configure --runtime=docker

This command adds an nvidia entry under runtimes in /etc/docker/daemon.json, which registers NVIDIA as a valid container runtime.

B. Set NVIDIA as the Default Runtime

Edit the Docker daemon configuration file:

sudo nano /etc/docker/daemon.json

Confirm that the file contains the following JSON, adding any entries that are missing:

{
  "runtimes": {
    "nvidia": {
      "path": "nvidia-container-runtime",
      "runtimeArgs": []
    }
  },
  "default-runtime": "nvidia"
}

Save and exit the editor.
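Before restarting Docker, the edited file can be checked for the default-runtime entry. The function below is a rough sketch: default_runtime_configured is a hypothetical name, and it does a plain text match rather than parsing JSON, so it will not catch syntax errors such as a missing comma.

```shell
# Hypothetical helper: succeed when the file $1 sets "default-runtime"
# to "nvidia". This is a text match only, not a JSON validator.
default_runtime_configured() {
    grep -Eq '"default-runtime"[[:space:]]*:[[:space:]]*"nvidia"' "$1"
}

# Usage (not executed here):
#   default_runtime_configured /etc/docker/daemon.json && echo "default runtime set"
```

If python3 is available on the device, running python3 -m json.tool /etc/docker/daemon.json is one way to check the syntax as well.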

C. Restart Docker Service

Apply configuration changes:

sudo systemctl restart docker

Verify Docker has enabled NVIDIA runtime:

docker info | grep -i runtime

The output should include lines similar to:

Runtimes: io.containerd.runc.v2 nvidia runc

Default Runtime: nvidia
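The same check can be scripted by reading the docker info text and looking for the Default Runtime line. This is a sketch that assumes the output format shown above; default_runtime_is_nvidia is a hypothetical helper name.

```shell
# Hypothetical helper: read `docker info` text on stdin and succeed
# when the "Default Runtime" line names nvidia.
default_runtime_is_nvidia() {
    grep -Eq '^[[:space:]]*Default Runtime:[[:space:]]*nvidia[[:space:]]*$'
}

# Usage (not executed here):
#   docker info 2>/dev/null | default_runtime_is_nvidia && echo "nvidia is the default"
```

Unlike inspecting daemon.json, this reflects what the running daemon actually loaded, so it only succeeds after the restart in step C.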

D. Log in to nvcr.io

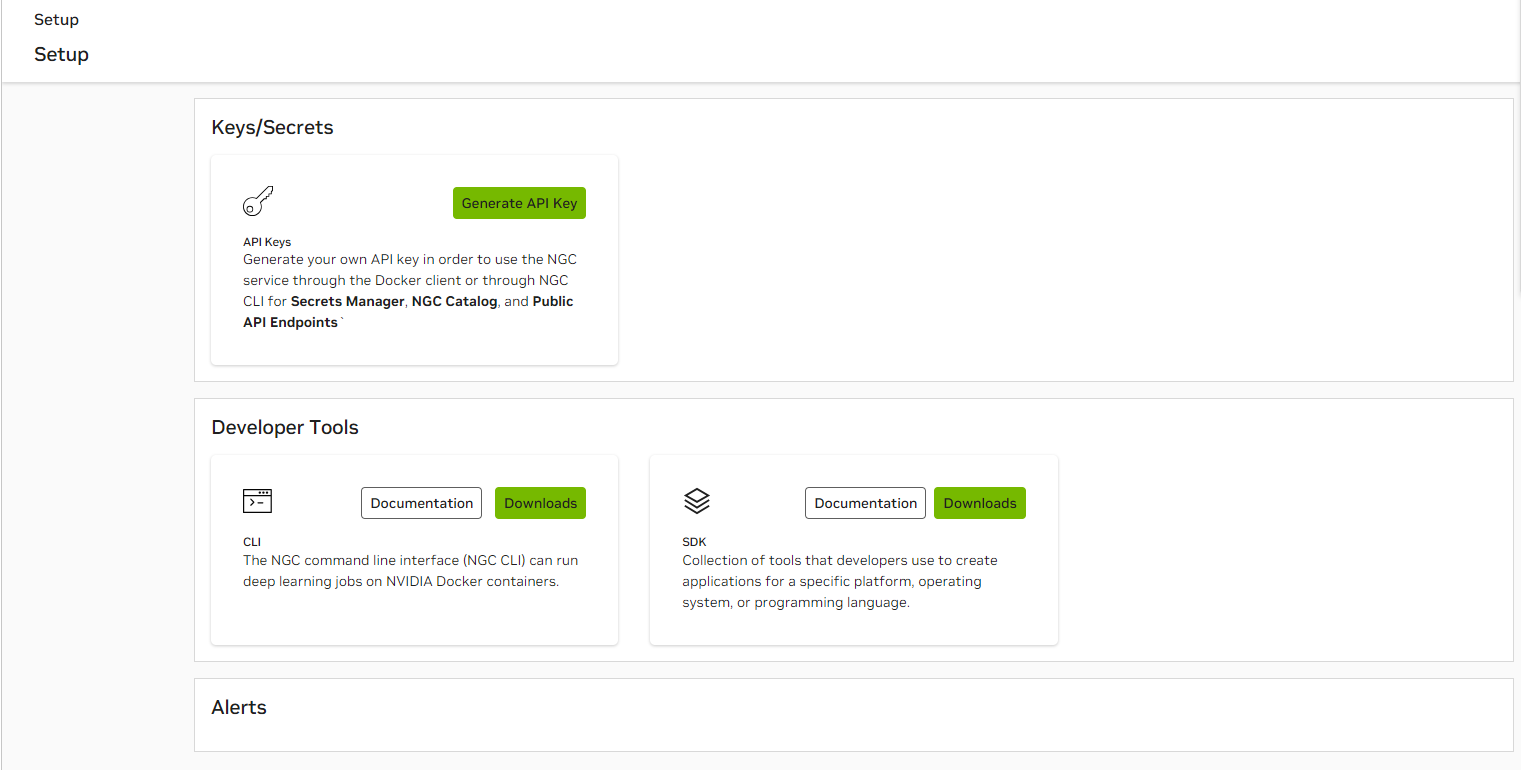

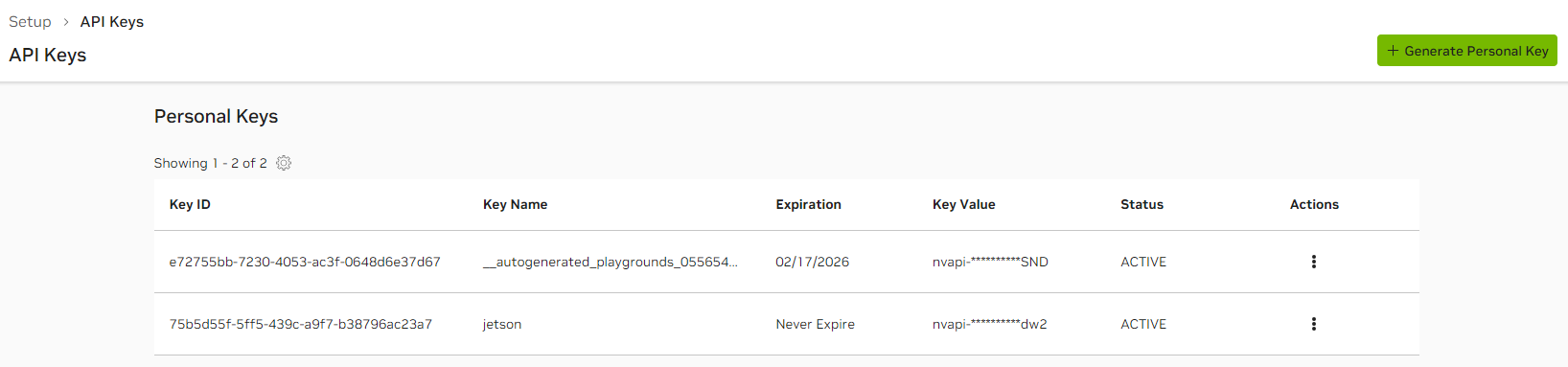

Obtain NGC_API_KEY

- Generate API Key

- Generate Personal Key

- Docker login

sudo docker login nvcr.io

# The username is always the literal string $oauthtoken (it is not a shell variable)

Username: $oauthtoken

# The password is your NGC API key

Password: YOUR_NGC_API_KEY

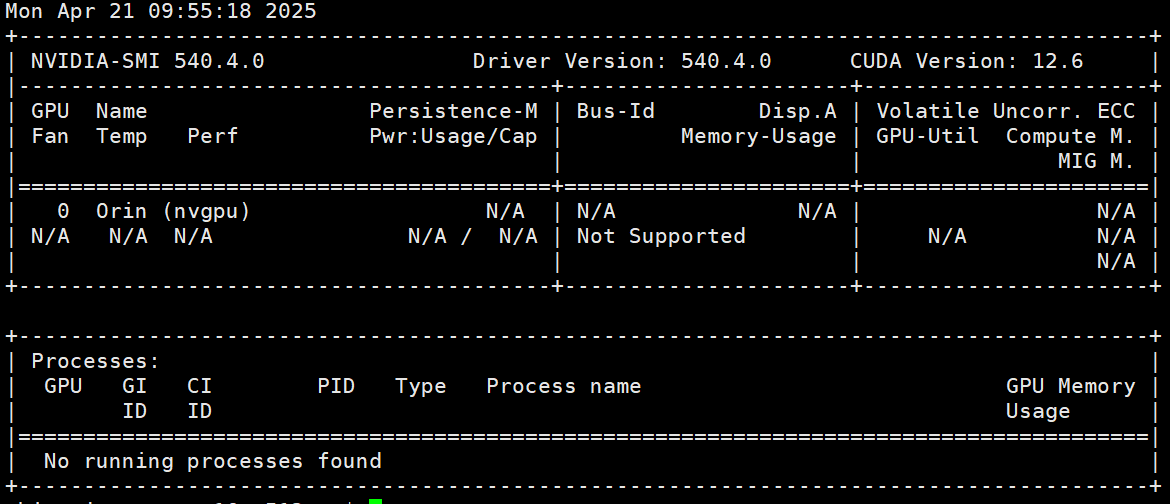
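For scripted setups, the login can be done without the interactive prompt by passing the key on standard input. The sketch below assumes the key has been exported as NGC_API_KEY beforehand; ngc_login is a hypothetical wrapper, while --username and --password-stdin are standard docker login options.

```shell
# Hypothetical wrapper around `docker login` for nvcr.io.
# Assumes NGC_API_KEY has been exported in the environment.
ngc_login() {
    if [ -z "${NGC_API_KEY:-}" ]; then
        echo "NGC_API_KEY is not set" >&2
        return 1
    fi
    # Single quotes keep the shell from expanding $oauthtoken as a variable.
    printf '%s' "$NGC_API_KEY" |
        sudo docker login nvcr.io --username '$oauthtoken' --password-stdin
}

# Usage (not executed here):
#   export NGC_API_KEY=YOUR_NGC_API_KEY
#   ngc_login
```

Reading the key from standard input keeps it out of the command line, where it would otherwise be visible in the shell history and process list.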

7. Test GPU Access in Containers

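Run the L4T base container from NGC to test GPU availability. The image tag should match the L4T release installed on the device; r36.2.0 is used here as an example: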

docker run --rm --runtime=nvidia nvcr.io/nvidia/l4t-base:r36.2.0 nvidia-smi

Expected output:

- Displays CUDA version and Jetson GPU information

- Confirms the container has successfully accessed the GPU
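The image tag in the test command is tied to an L4T release, so it helps to know which release the device runs. The sketch below derives a tag-style string from the first line of /etc/nv_tegra_release. The line format it expects ("# R36 (release), REVISION: 2.0, ...") is an assumption based on typical Jetson systems, l4t_tag_from is a hypothetical helper, and not every L4T release necessarily has a matching l4t-base tag on NGC.

```shell
# Hypothetical helper: turn a release line such as
#   "# R36 (release), REVISION: 2.0, GCID: ..., BOARD: ..."
# into a tag-style string such as "r36.2.0".
# Prints nothing when the line does not match the assumed format.
l4t_tag_from() {
    printf '%s\n' "$1" |
        sed -n 's/^# R\([0-9][0-9]*\) (release), REVISION: \([0-9][0-9.]*\).*/r\1.\2/p'
}

# Usage on a Jetson device (not executed here):
#   tag=$(l4t_tag_from "$(head -n 1 /etc/nv_tegra_release)")
#   echo "Host L4T release: $tag"
```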

You can also use the community-maintained jetson-containers project to quickly set up a development environment (recommended).

8. Tips and Troubleshooting

| Issue | Solution |
|---|---|
| nvidia-smi not found | Jetson uses tegrastats as an alternative |
| No GPU in container | Ensure the default runtime is set to nvidia |
| Permission denied | Check that the user is in the docker group |
| Container crashes | Check logs: journalctl -u docker.service |

9. Appendix

Key File Paths

| File | Purpose |
|---|---|
| /etc/docker/daemon.json | Docker runtime config |
| /usr/bin/nvidia-container-runtime | NVIDIA runtime binary path |
| ~/.docker/config.json | Docker user config (optional) |
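When troubleshooting, a quick first step is to confirm that the files listed above exist. The helper below is a small sketch; report_paths is a hypothetical name and it only tests for existence, not for valid contents.

```shell
# Hypothetical helper: print "ok <path>" or "missing <path>"
# for each path given as an argument.
report_paths() {
    for p in "$@"; do
        if [ -e "$p" ]; then
            echo "ok $p"
        else
            echo "missing $p"
        fi
    done
}

# Usage (not executed here):
#   report_paths /etc/docker/daemon.json \
#                /usr/bin/nvidia-container-runtime \
#                "$HOME/.docker/config.json"
```

A missing ~/.docker/config.json is not an error by itself, since that file is optional.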